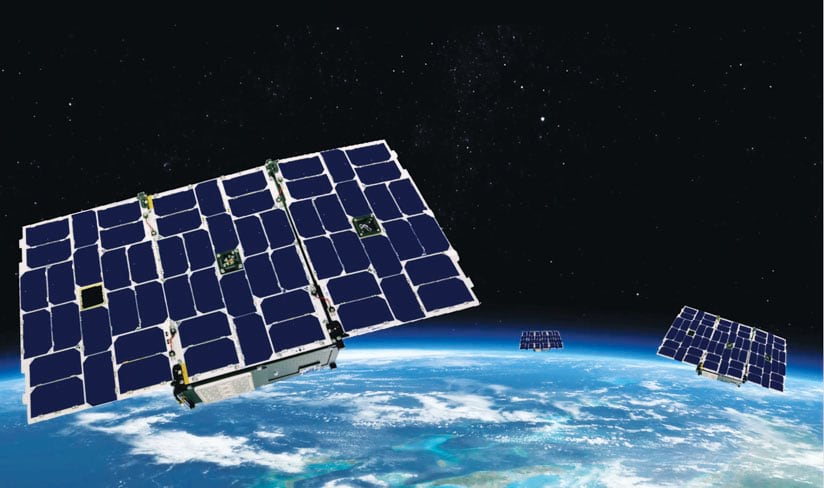

GeoOptics is putting a constellation of nanosatellites into space.

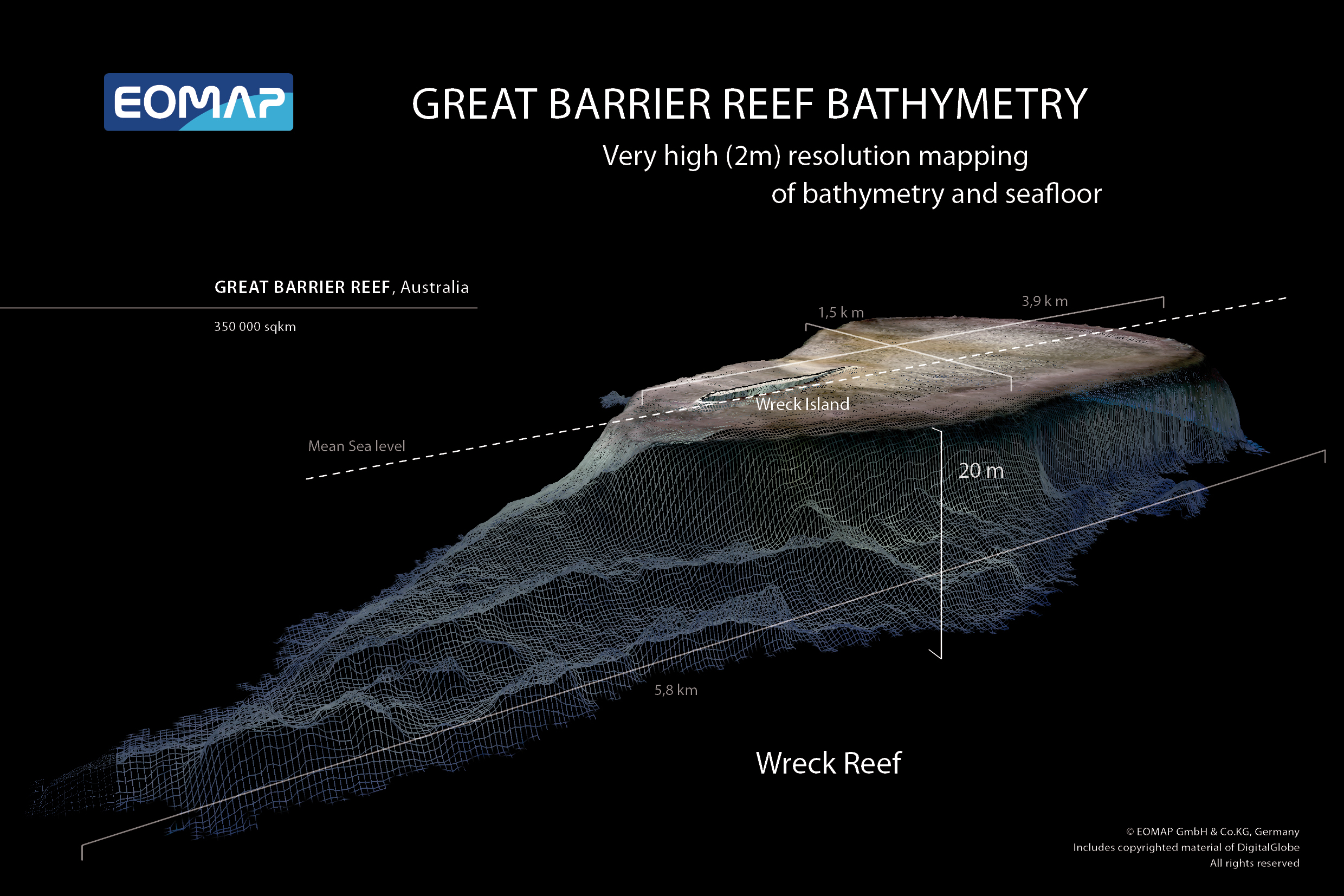

Radio signals collected by these satellites yield 100- to 500-meter vertical resolution.

From Esri

Remote sensing, weather forecasting, and climate science are undergoing a drastic change.

Nanosatellites, which cost much less to produce and launch than traditional satellites, can now collect vast amounts of extremely accurate atmospheric data using a technique called radio occultation.

This data collection method uses GPS signals to monitor all sorts of variables between the earth’s surface and the top of the stratosphere, from atmospheric refractivity to moisture, pressure, and temperature.

The pioneer behind this science is Dr. Thomas Yunck, a leading expert on GPS and the founder, chairman, and chief technology officer of a startup called GeoOptics.

“This is a huge revolution,” said Yunck, who first looked into using GPS for science in 1979 while working as an engineer at the National Aeronautics and Space Administration’s (NASA) Jet Propulsion Laboratory (JPL).

He wrote the first-ever proposal to use GPS signals to measure the earth’s atmosphere from space in 1988, and he has been deeply involved in developing and validating this technology ever since.

“It’s disruptive technology that could replace the traditional, old, slow way of collecting modern, high-resolution weather data,” said Lawrie Jordan, Esri’s director of imagery and remote sensing.

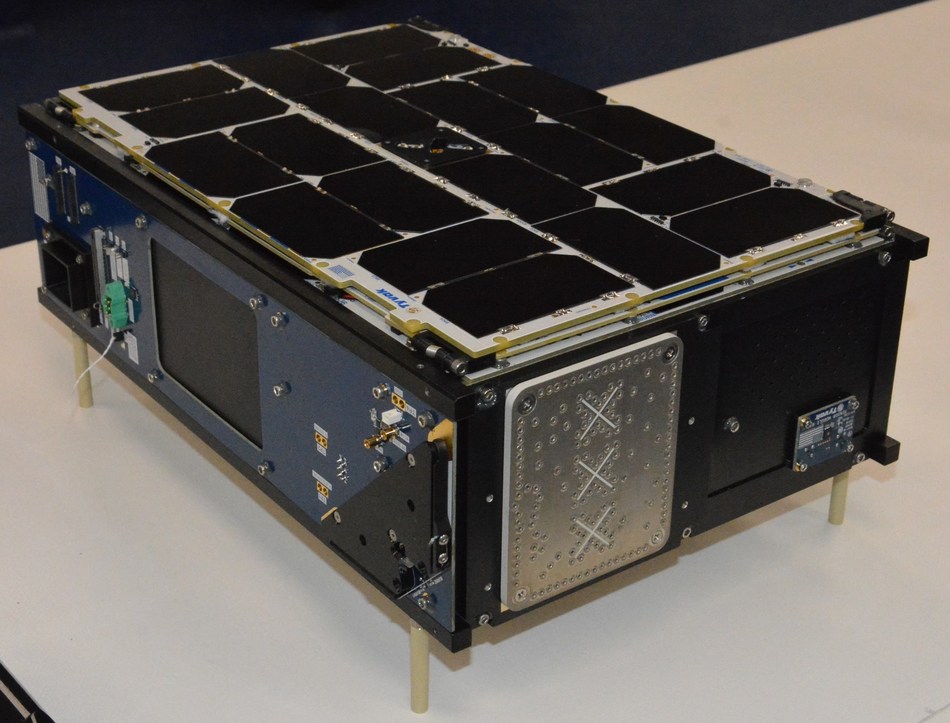

Tyvak Nano-Satellite Systems Inc. CICERO 6U Nanosatellite for GeoOptics

Picture Courtesy: CISION PR Newswire

An Ideal Solution to a Lot of Problems

Forty years ago, when GPS was brand-new, Yunck looked into how to apply the technology to science.

“We were using it to support deep space navigation at JPL, so we were investigating it for that purpose,” Yunck recalled.

After studying GPS for what he believes was about two weeks, he wrote an unassuming report stating that it was promising technology for the future.

“Little did I know that one day, it would consume my life,” he said.

“As time went on, we found that GPS was essentially an ideal solution to a lot of problems: navigating spacecraft, determining the locations of points on the ground, determining Earth’s rotation, and much more.

All these things could be addressed extremely efficiently with GPS.”

Throughout the 1980s, he and his colleagues at JPL devised a variety of precise techniques for using GPS to help map the topography of the ocean’s surface and measure tectonic plate motion, which had never been observed before.

The applications for GPS grew even further in the 1990s, and Yunck kept devising new ways to use the technology.

“It occurred to me that we could use GPS receivers on satellites to observe GPS signals passing through the atmosphere,” he said.

“If we could point antennas on satellites at the horizon, we could watch signals rise and set in the atmosphere, and we could observe the atmosphere itself.

This was a rather novel idea for GPS.”

It would also turn out to be an entirely different method for measuring the atmosphere, leading to more precise weather forecasting and studies on climate change.

NASA funded the early development of this idea, and that launched the worldwide discipline of radio occultation.

But the technology is highly disruptive, especially now.

Shrinking Technology and Shifting the Paradigm

While Yunck and his colleagues at JPL were developing radio occultation, technological devices were shrinking physically.

“A cell phone is basically what, 20 years ago, would have been a super computer in a room,” said Yunck.

“Cell phones, computers, and other consumer-type devices like flat-screen TVs—they’ve all gotten a lot smaller and/or cheaper.”

The same kind of technology that facilitated the miniaturization of consumer devices is now making it possible to build very powerful satellites that weigh 10 kilograms, have a volume of 6 liters, and cost under $1 million to build and a few hundred thousand dollars to launch.

“It’s that kind of technology that allows us to miniaturize spacecraft that used to be as big as a bus or a minivan and cost a billion dollars to build,” explained Yunck.

But the aerospace industry has been slow to get on board with miniaturization, mostly because government agencies have always had vertical control over space-based data collection—from developing the technology and getting it into orbit to gathering the data and using it.

In 2005, Yunck realized that the revolution he was trying so hard to push would have to materialize from the outside.

He got a few colleagues on board and formed GeoOptics, a small, private company based in Pasadena, California.

“We’re overturning a decades-long paradigm of having many government-owned assets in space and shifting to privately owned assets, where small companies are gathering, generating, and delivering data to governments,” said Yunck.

“Over the next 10 to 15 years, everything about remote sensing is going to change.”

Much More Accurate Atmospheric Data

Since its founding, GeoOptics has faced some technological and financial difficulties.

But the company now has three operational nanosatellites in orbit and, as of November 2018, is under contract to deliver its science-quality radio occultation data to the National Oceanic and Atmospheric Administration (NOAA) and the US Air Force.

“In the last couple years, they have come around to the concept of buying data from a private company because it’s much more cost-effective,” said Yunck.

Working with another Southern California-based startup, Tyvak, which builds the nanosatellites, Yunck and his team aim to put a group of satellites into space.

They call this the CICERO constellation, which stands for Community Initiative for Continuous Earth Remote Observation.

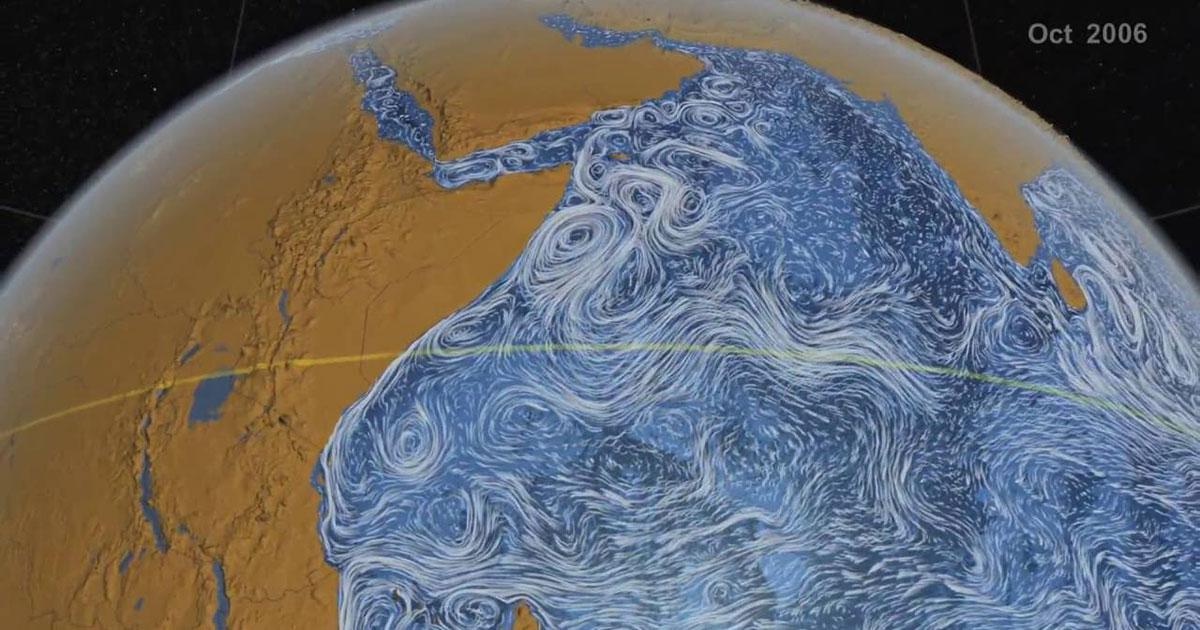

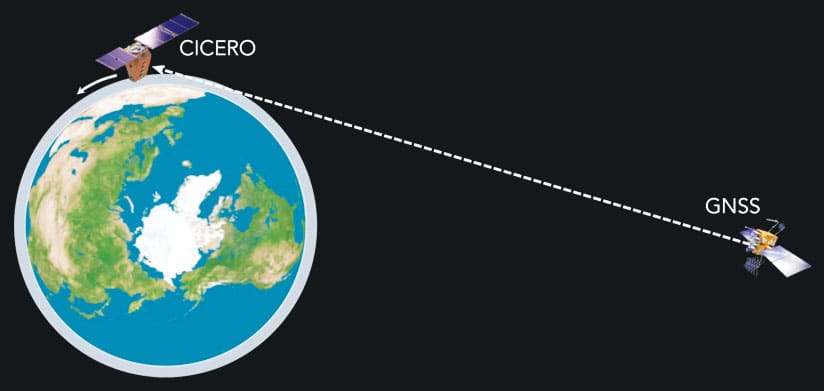

Radio signals originate at very high-altitude Global Navigation Satellite System (GNSS) satellites, pass through the atmosphere, and are received by much lower-altitude CICERO satellites.

As CICERO satellites move in orbit, they see the radio signal pass from the top of the atmosphere down to the surface before disappearing.

“I expect that within 10 years, we will have over 100 satellites,” said Yunck.

“That’s going to change the world.”

Using radio occultation, these nanosatellites produce better data than typical satellites, according to Yunck.

They collect active radio signals passing through the atmosphere to detect the atmosphere’s refractivity.

This lets scientists observe atmospheric density, pressure, temperature, and moisture.

They can also derive information about high-altitude winds from that data.
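To make the link between refractivity and the weather variables concrete, here is a minimal sketch based on the widely used Smith-Weintraub approximation, which relates refractivity N to pressure, temperature, and water vapor. The article does not say which formula GeoOptics uses, so the function names, the coefficients chosen, and the sample values below are assumptions for illustration only.

```python
def refractivity(pressure_hpa: float, temperature_k: float,
                 vapor_pressure_hpa: float = 0.0) -> float:
    """Approximate refractivity N (in N-units) via the Smith-Weintraub relation.

    Assumed inputs: total pressure and water vapor partial pressure in hPa,
    temperature in kelvin.
    """
    dry_term = 77.6 * pressure_hpa / temperature_k
    wet_term = 3.73e5 * vapor_pressure_hpa / temperature_k ** 2
    return dry_term + wet_term


def dry_temperature(refractivity_n: float, pressure_hpa: float) -> float:
    """Invert the dry term only: valid where water vapor is negligible,
    for example in the stratosphere."""
    return 77.6 * pressure_hpa / refractivity_n


if __name__ == "__main__":
    # Illustrative sea-level values, not measurements from CICERO.
    n_dry = refractivity(1013.25, 288.15)
    n_moist = refractivity(1013.25, 288.15, vapor_pressure_hpa=10.0)
    print(f"dry air:   N = {n_dry:.1f}")
    print(f"moist air: N = {n_moist:.1f}")
    print(f"recovered dry temperature: {dry_temperature(n_dry, 1013.25):.2f} K")
```

The sketch shows why moisture matters: a modest amount of water vapor changes refractivity noticeably near the surface, so separating temperature from moisture there requires additional information, while in dry upper air the refractivity maps almost directly to temperature.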

A radio signal collected by a CICERO satellite goes from the earth’s surface to the top of the atmosphere in about 60 seconds and yields 100- to 500-meter vertical resolution.

This allows the satellites to measure over 150 levels of the atmosphere, far more than bigger instruments, such as radiometers, which typically measure 6 to 10 levels.
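As a rough check on how those figures fit together, the arithmetic can be sketched as follows. The 50-kilometer depth is an assumption (an approximate height for the top of the stratosphere); the article itself gives only the 60-second duration, the 100- to 500-meter resolution, and the 150-level count.

```python
# Assumed depth of the sounded atmosphere, surface to roughly the
# top of the stratosphere. This figure is not from the article.
ASSUMED_DEPTH_M = 50_000


def level_count(depth_m: float, resolution_m: float) -> int:
    """Number of whole vertical levels of a given thickness in the profile."""
    return int(depth_m // resolution_m)


def resolution_for_levels(depth_m: float, levels: int) -> float:
    """Average layer thickness needed to fit a given number of levels."""
    return depth_m / levels


if __name__ == "__main__":
    for res in (100, 500):
        print(f"{res} m resolution -> {level_count(ASSUMED_DEPTH_M, res)} levels")
    avg = resolution_for_levels(ASSUMED_DEPTH_M, 150)
    print(f"150 levels -> about {avg:.0f} m per level")
    # Average vertical scan speed of the signal path over a 60-second pass.
    print(f"scan rate  -> about {ASSUMED_DEPTH_M / 60:.0f} m/s")
```

Under that assumption, 150 levels corresponds to an average spacing of a few hundred meters, which falls inside the quoted 100- to 500-meter range.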

“It’s very high-resolution, high-frequency data,” said Jordan.

“It’s much more accurate, which will improve weather forecasts.”

“These satellites will have a direct impact on all the regimes of weather forecasting, such as 1- to 15-day weather forecasts, seasonal and longer climate forecasts, and severe storm forecasts,” said Yunck.

When monitoring a hurricane, for example, knowing the atmospheric pressure and moisture levels is critical to determining where the tropical storm is heading and how strong it will be when it makes landfall.

More accurate data will allow forecasters to make more specific predictions much farther in advance.

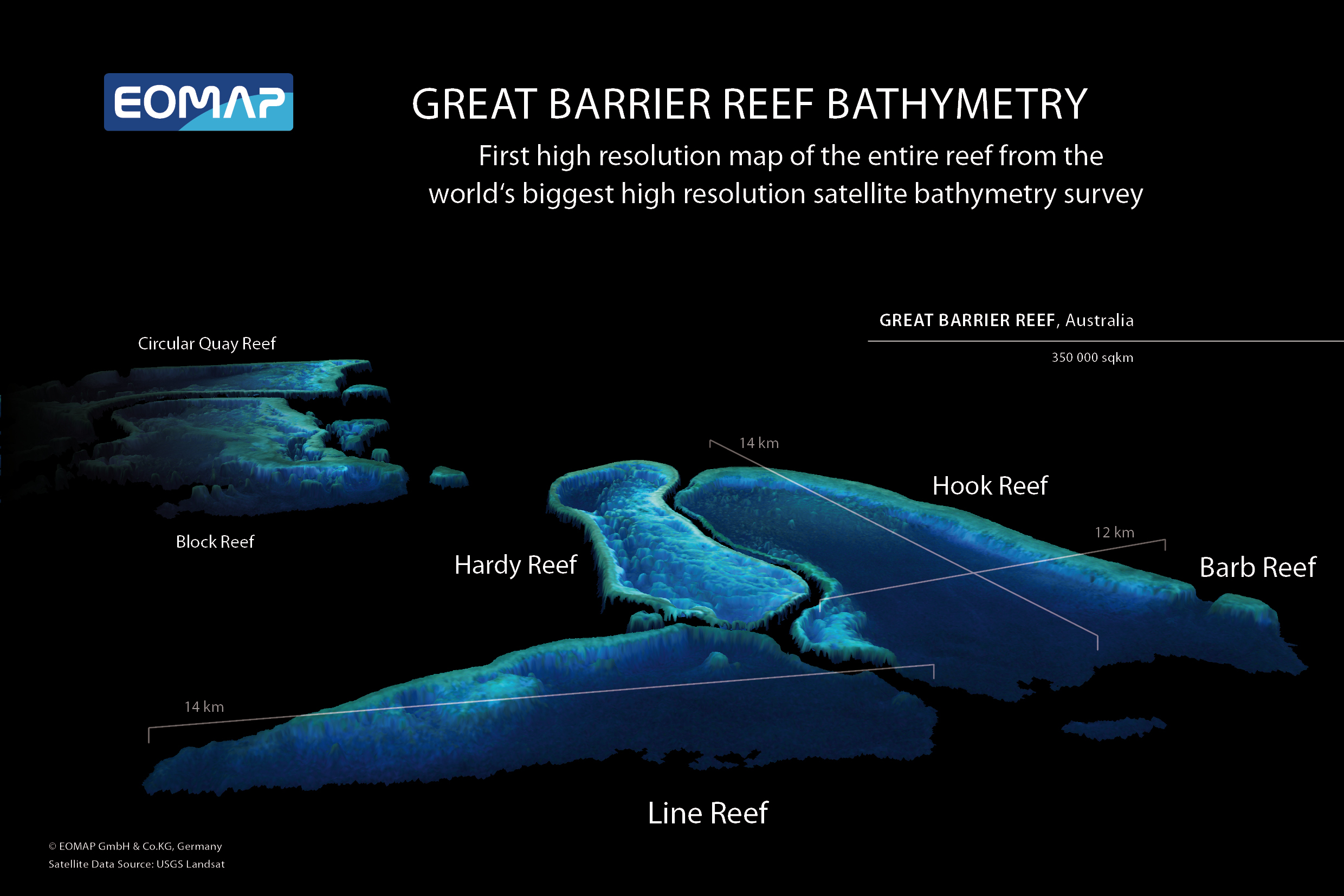

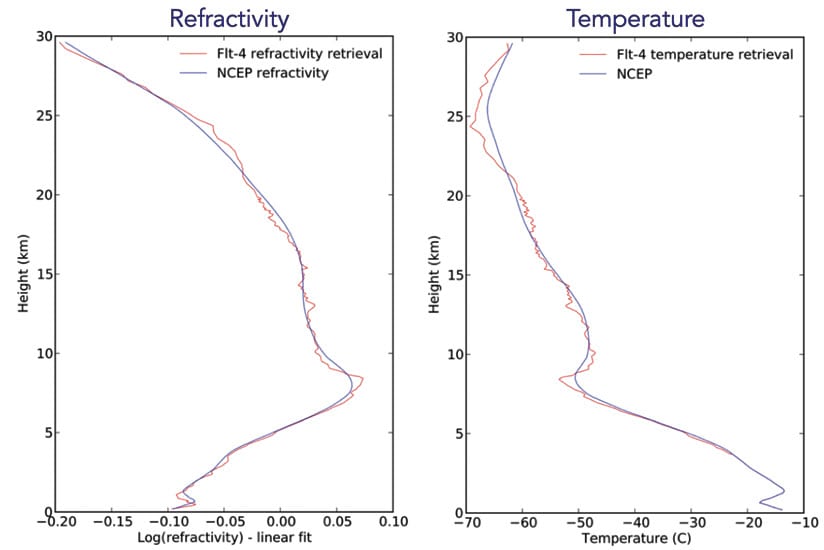

The red line shows atmospheric measurements made by CICERO satellites, while the blue line reflects the data model used by the National Oceanic and Atmospheric Administration (NOAA).

The two lines essentially match, but the measurements taken with the nanosatellites are much more detailed and precise.

The nanosatellite measurements are also more useful for assessing how weather patterns change over time, i.e., climate change.

Currently, indicators of rising temperatures around the globe are derived from measurements taken on the earth’s surface.

While that’s useful, Yunck says we also need to know what’s happening in the atmosphere.

This is much more difficult.

But nanosatellites using radio occultation can get an abundance of three-dimensional atmospheric measurements, which Yunck says is critical for studying climate change.

How to Weather the Future

The future of weather prediction is here. Enormous computer models of the Earth’s atmosphere underlie modern weather forecasts, and, driven by improvements in data, computers, and modeling, today’s five-day forecast has become as accurate as the three-day forecast was in 1980.

Achieving the next decade’s improvement requires a new paradigm of data, created by small satellites that can generate orders of magnitude more data at lower cost.

The GeoOptics CICERO constellation will use GPS radio occultation and other techniques to measure global weather patterns thousands of times per day.

The Gravity of Water

Water is life.

The first step to managing the world’s water resources is to know where they are, and where they are at risk.

From glaciers and icebergs to groundwater and aquifers, water can be detected by its tremendous weight.

NASA’s GRACE satellite (first proposed by GeoOptics founder Tom Yunck, while he was at JPL) has measured the Earth’s gravitational field to produce incredible data on the flow of water around the world.

Now, in cooperation with NASA and partners at Tyvak, GeoOptics is beginning work on the next gravity satellite constellation, which will measure the movement of water across the globe in unprecedented detail.

“Our technique is so precise that our ability to measure temperature in the atmosphere is at least 20 times more accurate than any other known technique in space,” said Yunck.

“So far, it’s the only technique that can observe global warming over a short period of time—months or a few years, as opposed to 5 or 10 or 20 years.”

Links :