Alpha.data.gov, an experimental data portal created under the White House's Open Data Initiative.

From Slate

President Obama's executive order to open government data is a really big deal.

Long before steam engines and turbines carried us swiftly over the oceans, a disabled sailor who could no longer serve on a ship found something to do ashore: aggregate the data from shipping logs.

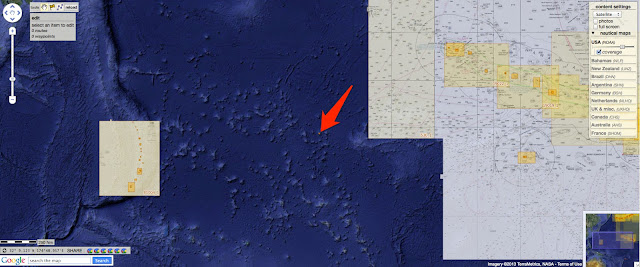

When Matthew Fontaine Maury (see GeoGarage blog) started analyzing those logs and mapping them onto charts, he found previously invisible patterns in weather, winds, and currents.

He also made a crucial decision for navigators around the world: after collecting the data, Maury shipped them to anyone who wanted them and asked for contributions in return.

Over time, it became a worldwide project.

Maury saw great value in publishing the data “in such a manner that each may have before him, at a glance, the experience of all.”

Notably, President John Quincy Adams agreed.

Not long afterward, the United States created standards for reporting meteorological data and endowed the U.S. Naval Observatory.

The lines of equal ocean temperature (the sinuous east-west lines) on this chart from Maury's *The Physical Geography of the Sea* were generated "by actual observations made indiscriminately all over it" (p. 231).

Maury asserted that such information helped to "increase our knowledge concerning the Gulf Stream, for it enables us to mark out,…the 'Milky Way' in the ocean, the waters of which teem, and sparkle, and glow with life and incipient organisms as they run across the Atlantic." (p. 231)

In many ways, Maury's work and the government's codification and release of these data set the stage for the historic moment we find ourselves in.

Around the world, people are still using government weather data when they travel, though few consult nautical charts.

Instead, they tap into the growing number of devices and services that make open data more actionable.

For instance, think about how you use the mapping apps on an iPhone or Android device.

That glowing blue dot places you in time and space, enabling you to know not only where you are but how to get somewhere else.

In more than 450 cities around the world, when you look for mass transit options, the routes and even departure times for the next train or bus show up on that interactive map as well.

GPS constellation:

The first true “Open Data Directive” was a mandate for “Free and Open GPS Signals”.

This was created and championed by President Ronald Reagan in 1983.

President Reagan's directive was a response to the tragedy of Korean Air Lines Flight 007, which strayed into Soviet airspace and was shot down.

President Reagan’s altruistic directive, which opened the military’s GPS to the world, gave the private sector an enormous opportunity, one now enjoying a second act 30 years later in the Government 2.0 ecosystem of open data.

Opening up GPS made it possible to build sophisticated navigation systems that could prevent future disasters.

The unforeseen consequence of President Reagan’s move was the creation of a $250 billion a year navigation industry (including GPS-enabled smartphones), millions of jobs, and the inspiration for the next generation of innovation and economic prosperity in the US.

That glowing blue dot exists because of a series of executive decisions made by Presidents Ronald Reagan and Bill Clinton, who progressively opened the data created by the satellites in the Global Positioning System to civilian use, enabling a huge number of location-based technologies to make their way into the palms of citizens around the world.

Now, we may see even more life-changing technologies as a result of open government data.

Last week, the White House released an executive order that makes “open and machine readable” the new default for the release of government information.

Although people who care about open data were generally quite excited, the news barely made an impression on the general public.

But it should: This is perhaps the biggest step forward to date in making government data—that information your tax dollars pay for—accessible for citizens, entrepreneurs, politicians, and others.


Before the Obama executive order, the openness of this kind of data had been threatened by the U.S. House of Representatives, which explored the privatization of NOAA services.

President Barack Obama announced the order on a trip to Austin, Texas, where he met the founder of StormPulse, a startup that uses weather data for risk analysis.

The White House also published a memorandum that established a framework to institutionalize the treatment of government information as an asset.

"This kind of innovation and ingenuity has the potential to transform the way we do almost everything," said Obama.

MATCH (the Metadata Access Tool for Climate and Health) incorporates metadata from six federal agencies’ datasets.

This isn't the first time the nation has heard this kind of rhetoric or seen this kind of initiative, although this was by far the president's most prominent mention of it to date.

In 2009, the federal government launched Data.gov as a platform for open data for civic services and economic reuse.

In the years since, dozens of other national and state governments have launched their own open data platforms.

From health information to consumer finance, government data are slowly making their way out of file cabinets and mainframes into forms through which they can be put to good use.

Many of these data are of fundamental interest to citizens, from the quality of the food we eat to the efficiency of our appliances to the safety of the cars we drive.

During Hurricane Sandy, open government data feeds became critical infrastructure, feeding into crisis centers and media maps that amplified them to millions of citizens searching for accurate, actionable information.

While all those efforts laid a foundation, the new executive order is both more legally binding and more specific.

It sends a clear statement from the top that open and machine-readable should be the default for government information.

The White House has also, critically, taken steps to operationalize these open data principles by:

- Mandating that when an agency procures a new computer or system that collects data, those data must be exportable. That won't address digitizing existing government documents and data but will create a default setting going forward.

- Planning to relaunch data.gov in a format compatible with dozens of other open-data platforms around the world.

- Requiring agencies to catalog what data they have. Understanding what you have is fundamental to managing information as an asset, although an open data policy that requires creating and maintaining an enterprise data inventory won't be without cost. Creating a public list of agency data assets based upon audits is one of the most important aspects of the new open data policy.

With this executive order, the president and his advisers have focused on using open data for entrepreneurship, innovation, and scientific discovery.

This executive order, associated tools, and policy won't in and of themselves be enough to achieve the administration's goals, at least with respect to jobs: They'll need entrepreneurs, developers, and venture capitalists to put the open data to use.

Governments looking for an economic return on investment must focus on open data with business value, according to research from Deloitte U.K.

Health, energy, education, transit, and safety data released by government all hold significant economic potential.

As is the case with GPS and weather data, however, government will have to ensure that data remains available to businesses founded upon it.

But advocates of open data also point to another area with great potential: transparency.

With Data.gov, the Obama administration had promised to make information available so citizens could keep an eye on things.

But some experts in this space are worried that with the emphasis on innovation and economic growth, the transparency element will be forgotten.

The nation's media relies upon Freedom of Information requests and confidential sources, not Data.gov.

Jim Harper, director of information studies at the Cato Institute, praised President Obama’s new open data policy but questioned its relationship to government transparency.

He writes:

“Government transparency is not produced by making interesting data sets available. It’s produced by publishing data about the government’s deliberations, management, and results. Today’s releases make few, if any, nods to that priority. They don’t go to the heart of transparency, but threaten to draw attention away from the fact that basic data about our government, including things as fundamental as the organization of the executive branch of government, are not available as open data.”

These are important questions that the Obama administration must address in the months ahead, although it is, admittedly, a little busy this week.

Still, the order has the potential to revolutionize industries, giving people better tools to navigate the world.

While the impact of open government data on democracy depends on functional institutions, the rule of law, political agency, and press freedom, its impact on the economy could be measured in the hundreds of billions of dollars over time.

“This memorandum is the most significant advance in information policies by the federal government since the passage of the Freedom of Information Act,” said open government advocate Carl Malamud, president of Public.Resource.Org.

Government data is a new kind of natural resource that can now be tapped and applied to the public good.

Links:

- NOAA NCDC: Open access to physical climate data policy (2009)

- NOAA: Technology & Data: NOAA and Partners Deliver New Climate and Health Data Tool to Public

- NOAA: Assessing the economic & social benefits of NOAA data

- Slashdot: President Obama: U.S. Government will make data more open

- Climate Central: NOAA Head: Weather forecasts at risk over budget cuts

- NOAA: Statement from Dr. Kathryn Sullivan on NOAA’s FY 2014 Budget Request