From BoatInternational by Elaine Bunting

Escaping from the world is always possible on a yacht.

Now, says Elaine Bunting, many owners want to live and work aboard for even longer.

One of the unforeseen consequences of being locked down for much of 2020/2021 was that owners spent more time on their yachts – if they could get to them.

One-week trips to the boat were being extended to two or three weeks, and some owners even chose to ride out the worst of the pandemic on board.

Charters, too, were affected, with brokers reporting that while bookings were down, charterers were taking trips for longer periods.

This shift in usage has demanded new thinking from the superyacht industry.

“[Covid-19] has brought it home to people that they can spend more time on board if they need to,” says designer Jonny Horsfield.

His company, H2 Yacht Design, is behind long-range yachts such as 63.7-metre explorer vessel Scout and REV Ocean, a 182.9-metre expedition vessel that, when delivered, will become the world’s largest superyacht.

The studio’s portfolio also includes 75-metre Feadship Arrow, launched last summer, and 123-metre Lürssen Al Lusail from 2017.

“Generally speaking, I would say the interesting new yachts are much as they were a year ago but with more comfort, storage and supplies,” he observes.

“People are looking for a more casual solution to comfort rather than concentrating so much on what is normal or good for charters, and they wish to customise a little more.”

Storage, Horsfield says, is often compromised in order to squeeze in extra guest space.

Next-generation yachts fit for longer-term living will need more.

“I don’t think there’s a [specific] figure you need, it’s just more important to get it right than in the past,” he adds.

Read on for more tips for enjoyable longer spells on board.

Upgrade the onboard office

Credit: Burgess

“More people are working remotely, especially now they have seen that it works really well,” says sailing yacht designer Bill Dixon.

“Video conferences have improved immensely, so maybe travelling for work will become the second option.

Owners can actually spend time on their yacht and work and earn money.”

At the extreme end of what is possible is REV Ocean, designed to operate independently for months in remote areas from the Arctic to the Antarctic and to be interchangeable with an office environment ashore.

“You could run your entire business from that yacht,” says Jonny Horsfield.

“It has a functional business centre and a recreation of the owner’s entire office.

We have allocated a whole office suite and a trading room where you can have six guys on screens, as well as a massive conference room that can seat 35 directors where you can have board meetings – and they can all stay on board. It’s massive, bigger than most companies’ offices.”

The nature of boats was starting to change before the pandemic and this is now happening more rapidly, says Canadian naval architect Gregory Marshall.

“What we are seeing is a lot more self-contained long-range boats being away for four to six months.

This is a definite shift, and it’s across the board from 25 and 26 metres to our largest extended range project of 82 metres and everything in between.”

“More than 50 per cent of clients consider their office [in the design] and we have certainly been doing more video conference rooms and more common spaces off a sky lounge rather than the owner’s suite so people can sequester themselves in that.”

Create dual-use spaces to maximise what you can do

Credit: Giuliano Sargentini

A yacht is all about enjoying downtime, family time and holidays, and there will continue to be a need for flexible, multi-use spaces.

Designer Tim Heywood thinks that the experience owners and their families had of isolating on yachts gave some “the opportunity to turn hard times into a diverting and potentially enjoyable experience.”

With that in mind, size matters.

“It is possible to enjoyably isolate aboard a moderately sized yacht of, say, 30 metres, but to take a sizeable family with back-up staff and full health facilities, I would aim for something in the region of 70 to 90 metres with a touch-and-go helipad.”

For longer periods it is paramount, Heywood feels, to have facilities to keep family and guests entertained.

“You need a saloon that can double as a cinema, if you don’t have a cinema planned, and a dining room that is equipped with conferencing facilities.

Children’s play and craft rooms and additional gym equipment are all facilities that should be maximised.”

Credit: Jeff Brown/Breed Media

The 83-metre Amels Here Comes The Sun, 68-metre Amels Neninka (previously Aurora Borealis) and 86-metre Oceanco Seven Seas are examples of superyachts that have cinemas that can be used as lounges, and a growing number of yachts, including recently delivered 80-metre Artefact, feature a play and craft room for children.

The same applies outdoors, which can also be set up for multi-use, with more flexible arrangements than the normal fixed sofas and sunbeds, “allowing different layouts to add surprises to every day”, says Heywood.

Laura Pomponi, founder and CEO of Luxury Projects, says that versatile, convertible areas are more in demand than ever.

Lounge areas that can become a cabin, a gym that can be turned into a recreation area or an office, or a beach club that can be a cinema are all examples she cites.

Amenities such as balconies, saunas, spas and gyms are prized, particularly when guests are restricted in going out or have time on their hands, and convertible areas allow the living space to be varied.

Credit: Francisco Martinez

Pomponi and other designers say clients have been asking for a more homely, residential feel.

That means comfy sofas, high-end AV systems and high-speed communications.

It might also mean a different interior style.

“The minimalism we have seen in interiors in the last few years with lacquered surfaces and strong contrasts doesn’t work any more,” Pomponi says.

“We are back to veneers, warm light, linen fabrics and calm tones.”

Gregory Marshall agrees that there is a move away from elaborate interior styling, driven by the wish for a cleaner aesthetic.

“In the last few months people have settled into the concept of being germophobes, and that is changing interior design itself,” he observes.

“People want fewer nooks and crannies that are hard to clean.”

Invest in better systems

Credit: Feadship

“Family is one thing. Friends? Well, you know what they say: after a week, friends are like fish, they go off!” says the owner of a 41-metre explorer when asked for his advice on spending long periods on board. You need “a good chef who makes every meal an interesting part of the voyage, and one who takes into account each of the guests’ particular needs.”

Asked what else he would recommend, he replies: “Good separation of owner, guests and crew, tenders that are multifunctional and seaworthy, enough fuel at cruising [speed] to cross the Atlantic and Pacific Oceans from, say, the US, full displacement and the ability to handle rough conditions.

Also, living areas that feel warm and look warm in cold climates, and a lot of spares and backups.”

If good food is essential, so are the facilities and systems that make it possible over the longer term, and these need to be planned in a new build or refit.

In more remote places without regular access to shore, where crew can’t fly in food from all over the world, thought has to be given to how food will be stored, for example in extended fridges and freezers.

You need more, and bigger, storage, perhaps also rubbish and waste-handling systems, plus bigger tanks for fuel and black water.


Credit: Luxury Projects

Daniel Nerhagen, from Swedish studio Tillberg Design, has worked on the build and refit of superyachts from 72-metre Serenity and 69-metre Saluzi all the way up to cruise ships.

He points out that cold storage for provisions and rubbish is critical for independence but seldom easy to create in an existing vessel.

“On many yachts we have a very long range with fuel to be at sea or at anchor and that is not a challenge.

But most are used to being able to restock every week,” he says.

“With the Covid-19 situation, yachts were parked outside the harbours and crew were not allowed ashore.

That led to challenges, as they didn’t have the space.”

So what can be done on existing yachts?


Waste-handling systems are also becoming more sophisticated.

They are widely used on cruise ships, which can generate up to 10,000kg of rubbish and solid waste every day.

“To recycle food waste you can get machines that remove the water and dry the organic material, turning it into powder, and you store that in boxes,” Nerhagen says.

“All these waste-handling and compaction systems are available but you need to allocate space for them.

Most yachts don’t have it and it is hard to retrofit.”

Nerhagen observes that new regulations and standards in the cruise industry are resulting in older vessels being scrapped.

Credit: Tom Van Oossanen

Easy, fast access from shore is another essential requirement when living on board for longer periods.

Yachts need to carry good tenders, preferably covered.

For larger yachts travelling to new destinations, or away for longer, a certified helipad becomes important.

“We are definitely seeing much bigger toys on board for going to remote places,” says Gregory Marshall.

“If you want to do a 200-mile trip you need a tender that can do that.

The 82-metre we’re working on packs an 18-metre tender.”

Another consideration for ensuring smooth running for a longer period is crew rotation, which can have an impact on the space set aside for crew quarters.

“It’s not always high on the list of priorities,” says designer Jonny Horsfield, “but you need to think about how the crew are being looked after.

A lot I know are confined to the yacht and it is not great for them so the idea of having more space would be good.”

None of these changes necessarily demands a new build.

Royal Huisman recently redelivered 56-metre Feadship Broadwater with a brief to turn her into “a modern and liveable home from home.” To achieve this, the transom was removed and the yacht extended by four metres and given a restyled stern with an aft deck and beach club.

Outdoor amenities include a new hot tub on the sundeck and a bar.

The yard is also converting Juliet, a 43-metre Ron Holland-designed ketch from 1993, to hybrid propulsion.

This will allow quieter running and let her take advantage of her fuel storage for longer periods away from port.

H2 is working with a client who is looking for more space and wants to lengthen an existing yacht rather than wait for a new build.

The project would cut the yacht in the middle and extend it by around 10 per cent, leaving the engine room intact.

Large doors opening on to balconies will be added, along with more space for the owner, including another suite, a cinema and a bigger dining room, while the old spaces will become office areas.

Get ahead in technology


Besides fostering changes in design thinking, the quest for independence could also drive interest in sustainability.

“This is a big topic and it comes up at every client meeting,” says Daniel Nerhagen from Tillberg Design.

“How can you be self-sufficient? Wind turbines, solar panels, battery packs and fuel cells – how to extend your range or reduce fuel consumption and run the generator for only a few hours per day.

“These systems require more space than traditional diesel engines so it’s always a question of your priorities.” But, he adds: “For many people, the technology is a showcase.

It will accelerate – that’s inevitable.”

Bill Dixon, of Dixon Yacht Design, specialises in sailing yachts, such as the 55-metre Dixon 175 and the 70-metre Project NewDawn.

The latter is something of a crossover, a sailboat with an easily handled free-standing rig that has similar accommodation to a motor yacht of the same size, and for a comparable cost.

Technology that regenerates power from self-propulsion is the future, Dixon believes.

“Where it really does key into self-sufficiency is that, if you have some sail power, you have a way of generating electricity.

As we know now, Black Pearl can nearly run the vessel [on the power it regenerates] when it is sailing at a reasonable wind speed.

I believe we will see a lot more of that.

People like the idea.”

“Then there is taking the energy you generate and making hydrogen for fuel,” he adds.

“On one of my potential projects, a client has already investigated fuel cell technology.

In the scheme of things, a large yacht of 100 metres can go fast and generate a lot of free energy.

If you really want to do something incredibly special, this is the way to look at one future for large, eco-friendly superyachts.”

Explore new places

Nor is the appetite for travel likely to fade; you just have to look at the huge uptake in private jets. If anything, there could be an upsurge in the desire to spend more time exploring and visiting new places or more unusual destinations.

“I think people will want to travel but to new destinations, which is why the range and ability to be away for longer is important,” says Dixon.

“Owners are not giving up free time; they need their holidays.

Leisure time is important,” agrees Daniel Nerhagen.

“A yacht is still probably the safest place you could be and where you can escape all the current situation.”

A yacht that has been refitted or built to create the right environment and spaces for longer-term living, working and relaxation will allow owners and guests to take maximum advantage of the new working-from-home culture.

Could such configurations eventually become a new norm?


“It is its own bubble,” remarks the owner of a 55-metre explorer yacht, who spends months at a time on board with his family.

“It protects you from some parts of the world and allows you to build a strong family bond.

There is no greater luxury than being on a yacht.”


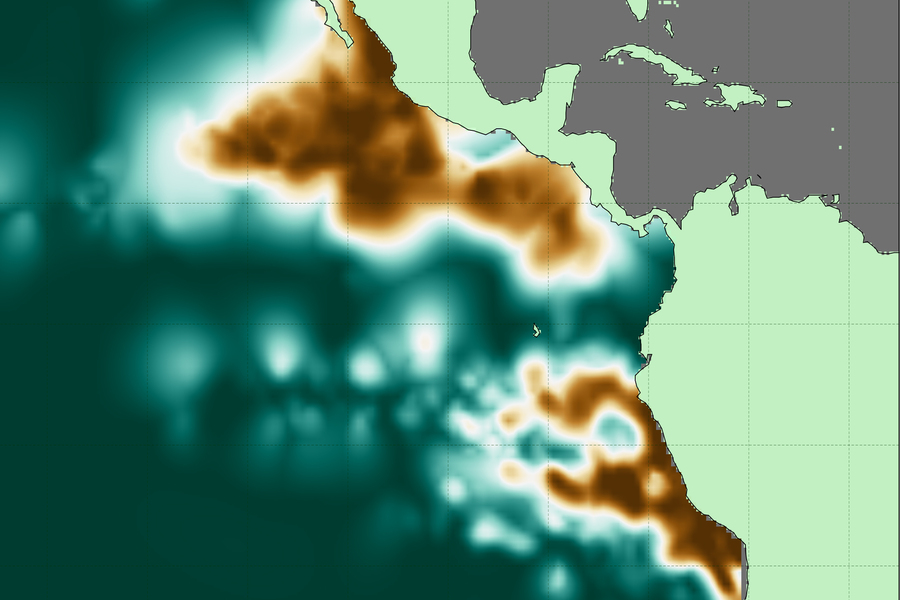

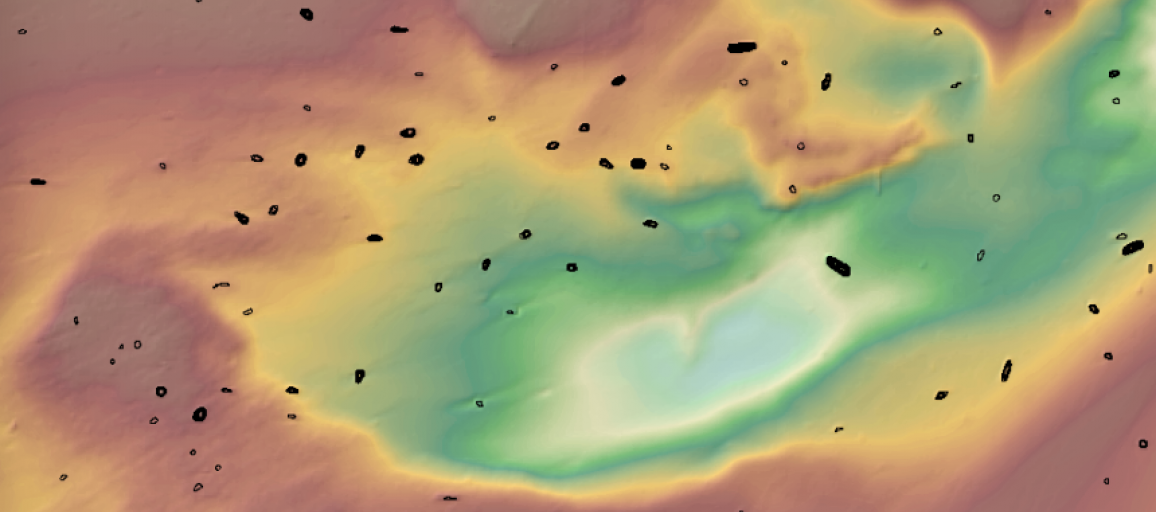

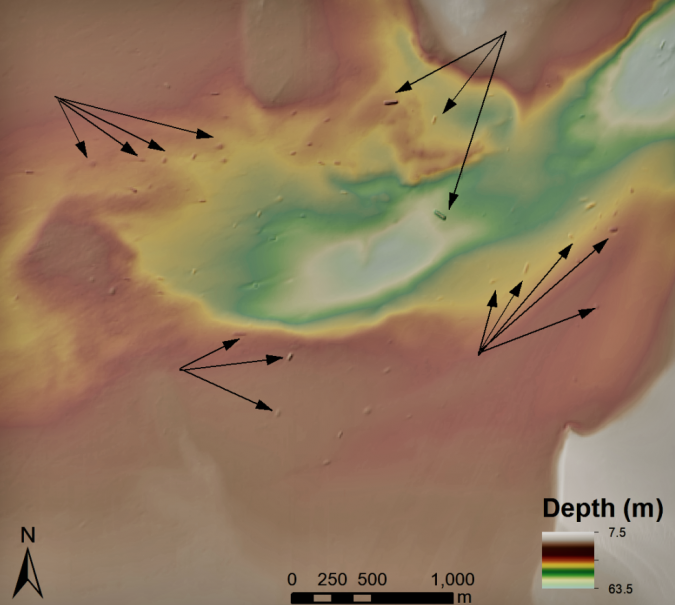

Figure 1: Shipwrecks visible in bathymetric data from the National Oceanic and Atmospheric Administration in the United States. Arrows highlight some of the larger visible shipwrecks.

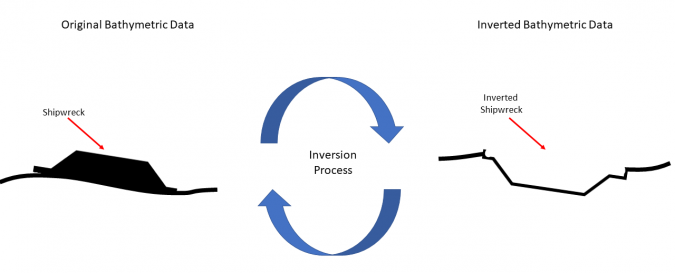

Figure 2: Illustration of how inverting bathymetric data works for detecting shipwrecks.

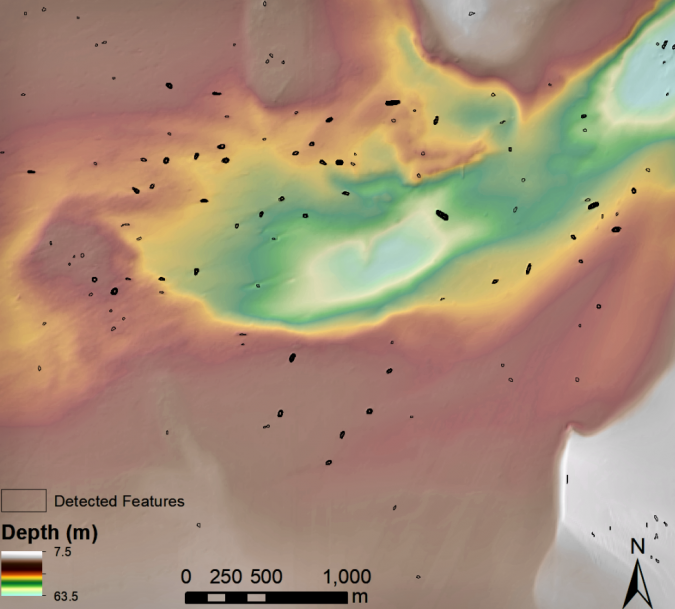

Figure 3: Example of detected shipwreck locations in the United States using sinkhole extraction algorithms. Credit: Dylan Davis, Danielle Buffa & Amy Wrobleski (2020).
