Highlights from ocean.org, the Ocean Wise storytelling platform dedicated to telling ocean stories and highlighting ocean issues.

From the most ancient animal known to a newly defined ocean zone, the world’s watery places never cease to amaze

As 2018 draws to a close, we look back on the studies, expeditions and stories that carried forward our knowledge and understanding of the world’s oceans—the lifeblood of the planet.

It was a year filled with triumphs, from the first successful revival of coral larvae following cryofreezing, to an optimistic progress report for the Chesapeake Bay’s restoration, to global awareness about single-use plastic straws.

It was also a year of discovery.

We learned of a shark that chows on greens, an entire new ocean zone teeming with life, and one of the earliest animals to ever live here on Earth.

The year also had its moments of grief and distress in the seas.

Noxious red tides, right whale populations that continue to decline, and the passing of a coral reef science legend are also on our minds as we look back at the oceans of 2018.

The following list of the year’s top ten ocean stories—the unique, troubling, perplexing and optimistic—was curated by the National Museum of Natural History’s Ocean Portal team.

An Odorous Stench

Red tide algae blooms on the coast of Florida.

(NOAA)

Those living in or visiting Florida this year may have noticed a particularly noxious stench lingering in the air.

This year the coastal waters of Florida are experiencing one of the worst red tides in recent history.

The tide is caused by a bloom of algae that feed on nutrient-rich runoff from farms and fertilized lawns.

Over 300 sea turtles, 100 manatees, innumerable fish and many dolphins have been killed by the noxious chemicals expelled by the algae.

Humans, too, can feel the effect of the fumes that waft onto the land, and beaches have closed because of hazardous conditions.

Many see this as a wake-up call for better management of the chemicals and nutrients that fuel the harmful algae’s growth.

Evolutionary Steps

Researchers first discovered Dickinsonia fossils back in 1946.

Evolution produces some wondrous marvels.

Scientists determined that the creature called Dickinsonia, a flat, mushroom-top-shaped creature that roamed the ocean floor roughly 580 million years ago, is the earliest known animal.

By examining the mummified fat of a particular fossil, the scientists were able to show that the fat was animal-like, rather than plant-like or fungus-like, thus earning the creature its animal designation.

We also learned that baleen whales may have evolved from a toothless ancestor that vacuumed its prey in the prehistoric oceans of 30 to 33 million years ago.

Today, evolution is still at work, and the adaptability of life continues to amaze.

A study of the Bajau “Sea Nomad” people shows that a life at sea has changed their DNA.

This group of people, who can spend over five hours underwater per day, have genetic alterations that help them stay submerged for longer.

Marvels in Plain Sight

Up to 1,000 octopus moms care for their brood.

(Phil Torres / Geoff Wheat)

Once again, we were reminded that as land-dwelling creatures, humans miss out on many of the ocean’s everyday wonders.

Although we know from museum specimens that the male anglerfish latches onto the female like a parasite and sucks nutrients from her blood, the infamous duo had never been caught in the act until now.

This year, a video was released showing the male anglerfish paired with his lady counterpart.

And though sharks are known for their carnivorous appetites, a new study shows even these marine predators will eat leafy greens.

About 60 percent of the bonnethead shark’s diet consists of seagrass, upending the idea that all sharks are primarily carnivores.

Also, scientists discovered not one, but two, mass octopus nurseries of up to 1,000 octopus moms deep underwater.

The second discovery assuaged doubts that the initial discovery was a case of confused octomoms, as octopuses are known to be solitary creatures.

Now, scientists are determining if volcanic activity on the seafloor provides some benefit to the developing brood.

Futuristic Resurrection

Adult Mushroom Coral (Smithsonian Conservation Biology Institute)

The field of coral reef biology has weathered some hard times these past years, and while this year saw the unfortunate death of a coral reef conservation legend, Dr. Ruth Gates, it also brought us a glimmer of hope.

For the first time, scientists were able to revive coral larvae that were flash frozen—a breakthrough that may enable the preservation of endangered corals in the face of global climate change.

Previously, the formation of harmful ice crystals destroyed the larvae’s cells during the warming process, but now the team has devised a method that uses both lasers and an antifreeze solution infused with gold particles to rapidly heat the frozen larvae and avoid crystal formation.

Soon after thawing, the larvae are able to happily swim about.

We now live in a world where oceans frequently spike to temperatures too hot for corals, and scientists hope that preserving them may buy time to help corals adapt to the rapidly changing environment.

The Impacts of Ocean Warming

Rising temperatures and diminishing oxygen levels in the oceans are a threat to all kinds of marine life.

Just this month, a study showed that the mass die-off of species at the end of the Permian period, over 250 million years ago, was caused by a rapid increase in temperature and subsequent loss of oxygen in the ocean.

The oxygen deprivation caused an astounding 96 percent of ocean creatures to suffocate.

The cause of this extinction event had been long-debated, but this recent research indicates just how impactful our current climate change trajectory could be—the ocean has already lost 2 percent of its oxygen in the last 50 years.

Plastic Straws Make the News

States and companies alike take steps to reduce the use of plastic straws.

(Pixabay)

Straws make up an estimated 4 percent of plastic waste in the ocean, and though they are only a sliver of our plastic problem, the single-use items are now a hot issue.

A shocking video that featured the removal of a straw from the nose of an olive ridley sea turtle seemed to be the catalyst for a straw revolution this year.

Despite the video being several years old (the original was posted in 2015), it helped spark pledges from a number of companies like Starbucks and American Airlines to eliminate single-use plastic straws.

Even cities, states and countries are talking about banning the ubiquitous pieces of plastic. In September, California became the first state to enact such a rule, requiring that plastic straws be provided only when requested by a customer.

Because the rule does not ban them outright, those with disabilities who require a straw can still enjoy their favorite drinks.

Hope for the Chesapeake Bay

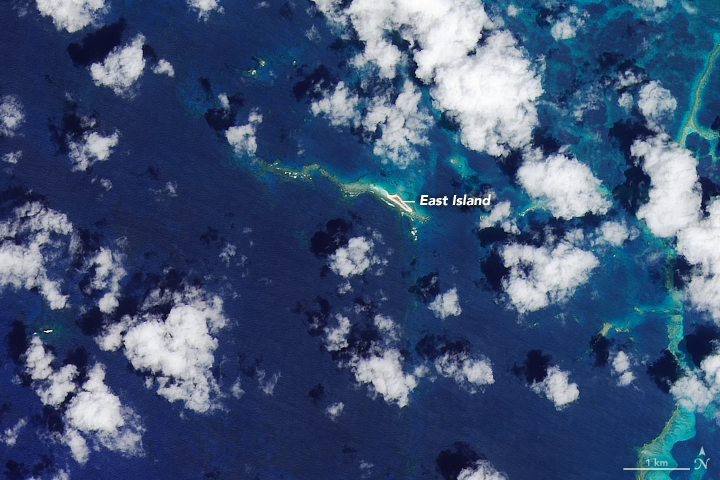

An effort to restore eelgrass beds along Virginia's Eastern Shore began in 2000 with a few seeds from the York River.

Today, these seagrass meadows have grown to 6,195 acres.

It’s not all bad news—especially for the Chesapeake Bay, an estuarine system that spans the states of Maryland and Virginia and is an important ecosystem for all of the mid-Atlantic region.

After decades of decline for seagrass, the vital plants are staging a comeback.

Reductions in nitrogen and phosphorus have brought seagrass cover back to an area that is four times larger than what’s been found in the region since 1984.

Seagrass is vital to the life cycle of the economically significant blue crab, which has been threatened for years but currently has a healthy population despite some setbacks.

Groups are also working to return ten billion oysters to the bay, and tiny oyster spat seem to be thriving despite the danger of recent freshwater influxes.

The recovery could even be a model for similar ecosystems in parts of the Gulf of Mexico and elsewhere.

A New Ocean Zone

Curasub owner Adriaan Schrier and lead DROP scientist Carole Baldwin aboard the custom-built submersible.

(Barry Brown)

Just like the layers of the atmosphere, scientists describe layers of the ocean based on the animals that live there and how much light is present.

This year, there was a new addition thanks to work from Dr. Carole Baldwin, a research zoologist at Smithsonian’s National Museum of Natural History.

Her team conceived of the rariphotic zone when they realized that the fish found there were not the same as those in the shallower mesophotic zone.

The newly recognized rariphotic zone ranges from 130 meters to at least 309 meters deep (427-1,014 feet).

It is too deep for corals with photosynthetic algae to grow, and it is also too deep to reach with the specialized SCUBA equipment used to explore mesophotic reefs.

Submersibles and remotely operated vehicles can explore the region, but they are expensive and generally used to explore even deeper parts of the ocean.

As a result, most reef researchers rarely make it to the rariphotic zone.

Baldwin manages to visit it often with the help of a deep-sea submersible, the Curasub, through the Deep Reef Observation Project based at the National Museum of Natural History.

No Calves for North Atlantic Right Whales

North Atlantic right whales are in peril, but changes to shipping routes and lobster trap design could help the large marine mammals make a comeback.

(Public Domain)

With just over 400 individuals remaining in the North Atlantic right whale population, this endangered species is on the brink.

Early in 2018 scientists announced that there had been no right whale calves sighted after the winter breeding season.

Changes to shipping lanes and speed limits over the past decade have helped reduce ship strikes, but entanglement in fishing gear has remained a problem—17 right whale deaths in 2017 were caused by entanglement.

But scientists still have hope.

There were only three recorded deaths in 2018, and the whales are now making their way back into North Atlantic waters.

We’ll keep our fingers crossed for a baby boom in 2019.

A Twitter Moment

1971 International Conference on the Biology of Whales.

(NOAA)

Social media has its downsides, with distractions and infighting, but it can also produce some pretty magical moments.

We watched in real time in March of this year as the search unfolded for an unidentified young woman in a photo from the International Conference on the Biology of Whales held in 1971.

An illustrator in the midst of writing a book about the Marine Mammal Protection Act, legislation from 1972 that protects marine mammal species from harm and harassment, came across the image, in which one African American female attendee was practically hidden and had no name listed in the caption.

Who was this pioneer in a field dominated by white men?

The illustrator took to Twitter for help and the search was on.

Over several days, leads came and went, and the woman was eventually identified as Sheila Minor (formerly Sheila Jones), who at the time of the photo was a biological technician at the Smithsonian’s National Museum of Natural History.

Even as scientists continue to make astounding discoveries in the watery depths of the world, some of our most important findings have been right here with us all along.