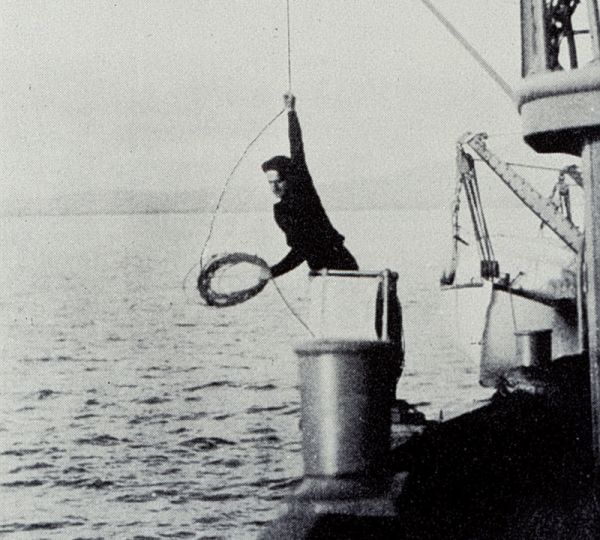

(Photo: 1928 and 1931, Hydrographic Manual)

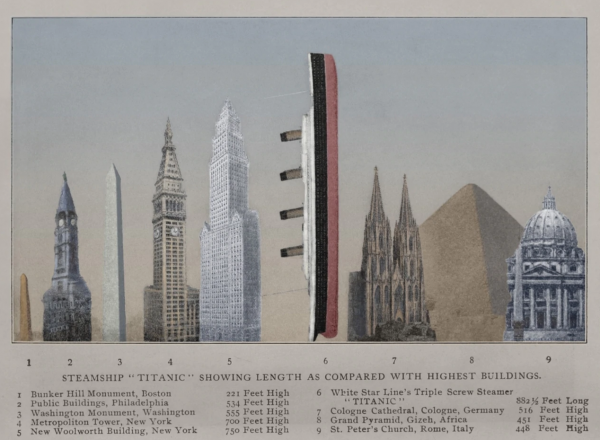

From Hydro

The profession of hydrographer is built upon measurement accuracy.

Ever since Lucas Janszoon Waghenaer produced the first true nautical charts in 1584, hydrographers have been working to improve the accuracy of their measurements.

For anyone fortunate enough to have reviewed Waghenaer’s atlas, the 'Spieghel der Zeevaert' (The Mariner’s Mirror), one of the first questions that comes to mind is: how accurate are these charts?

Two soundings stand out as indicative of both the relative accuracy of his data and Waghenaer’s integrity.

Study of Waghenaer’s charts reveals that there are few soundings deeper than 30 fathoms: one 60-fathom sounding, and one anomalous nearshore sounding of 200 fathoms.

This 200-fathom sounding was the final sounding of a line of soundings that ended with 7, 50, and then 200 fathoms.

Was this a blunder on Waghenaer’s part, an error on the part of the engraver, or indicative of the real configuration of the seafloor?

Comparison with a modern bathymetric map gave the answer.

These two soundings were made at the head of Setúbal Canyon – a large canyon incising the Portuguese continental shelf and slope.

The soundings are convincing evidence of Waghenaer’s integrity as a hydrographer and also his commitment to producing charts that were as accurate as the technology of the times permitted.

Waghenaer's Chart No. 17, oriented with north to the left. The 200-fathom sounding on the north wall of Setúbal Canyon is to the east of Cape Spichel.

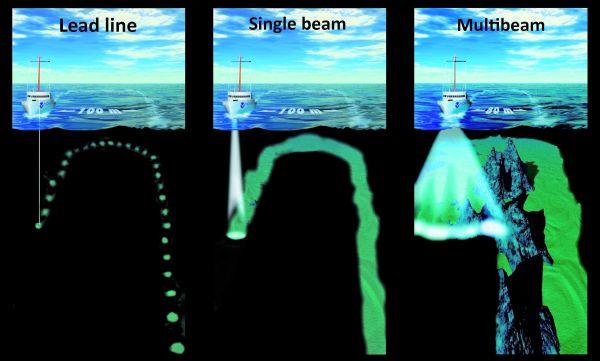

For the next 340 years, little was done to improve the accuracy of depth sounding instrumentation.

The hydrographer was constrained to using the lead line.

However, positioning technology began improving in the eighteenth century with the invention of the octant, sextant, chronometer and station pointer.

These inventions, coupled with the evolving understanding that depth information had to be placed in the same geographic framework as the shoreline and landmarks shown on charts, led to a quantum leap in the accuracy of charts.

This new understanding was driven by the work of such British hydrographers as Murdoch Mackenzie Senior, Murdoch Mackenzie Junior, Graeme Spence and the incomparable James Cook.

Triangulation Network

In 1747, Mackenzie Senior was the first hydrographer to develop a local triangulation scheme during his survey of the Orkney Islands.

In 1750, he wrote, “The lives and fortunes of sea-faring persons, in great measure, depend on their charts.

Whoever, therefore, publishes any draughts of the sea-coast is bound in conscience, to give a faithful account of the manner and grounds of performance, impartially pointing out what parts are perfect, what are defective, and in what respects each are so….”

The weakest link in this methodology was the use of compass bearings to position the sounding boat.

Murdoch Mackenzie rectified this situation in 1775 with the invention of the station pointer, or as it is known in the United States, the three-arm protractor.

This instrument allowed hydrographers to plot three-point sextant fixes, which resulted in horizontal accuracies of better than ten metres within the bounds of the triangulation scheme.
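The geometry behind a three-point fix can be sketched in a few lines of Python. This is only an illustration, not the graphical procedure carried out with the station pointer. It assumes a flat local grid in metres, three marks named left to right as seen from the boat, and two sextant angles swept clockwise between them; the function name `three_point_fix` and the sample coordinates are hypothetical. The sketch relies on the inscribed-angle theorem: each measured angle confines the boat to a circle through two of the marks, and the two circles meet at the centre mark and at the boat.

```python
import math


def three_point_fix(a, b, c, angle_ab_deg, angle_bc_deg):
    """Resect the observer's position from two horizontal angles.

    a, b, c are (x, y) positions of the left, centre and right marks as
    seen from the observer. The angles are swept clockwise (left to right)
    from a to b and from b to c, in degrees.
    """

    def circle_centre(p, q, clockwise_deg):
        # Signed counter-clockwise angle subtended by chord p-q at the observer.
        theta = -math.radians(clockwise_deg)
        s = math.sin(theta)
        if abs(s) < 1e-12:
            raise ValueError("observer is in line with two of the marks")
        cot = math.cos(theta) / s
        mx, my = (p[0] + q[0]) / 2.0, (p[1] + q[1]) / 2.0
        dx, dy = q[0] - p[0], q[1] - p[1]
        # Offset the chord midpoint along the chord's normal (-dy, dx).
        return (mx - dy * cot / 2.0, my + dx * cot / 2.0)

    o1 = circle_centre(a, b, angle_ab_deg)
    o2 = circle_centre(b, c, angle_bc_deg)
    ux, uy = o2[0] - o1[0], o2[1] - o1[1]
    norm2 = ux * ux + uy * uy
    if norm2 < 1e-9:
        raise ValueError("marks and observer lie on one circle; no unique fix")
    # Both circles pass through b, so the observer is the mirror image of b
    # across the line joining the two circle centres.
    t = ((b[0] - o1[0]) * ux + (b[1] - o1[1]) * uy) / norm2
    foot = (o1[0] + t * ux, o1[1] + t * uy)
    return (2.0 * foot[0] - b[0], 2.0 * foot[1] - b[1])


# Hypothetical shore marks and the angles a boat at (400, 200) would observe.
marks = [(0.0, 1000.0), (500.0, 1200.0), (1000.0, 1000.0)]
boat = (400.0, 200.0)
bearings = [math.atan2(m[0] - boat[0], m[1] - boat[1]) for m in marks]
alpha = math.degrees(bearings[1] - bearings[0])
beta = math.degrees(bearings[2] - bearings[1])
fix = three_point_fix(marks[0], marks[1], marks[2], alpha, beta)
print(fix)  # should reproduce the boat position to within rounding
```

When the boat lies on the circle through all three marks, the two circles coincide and the fix is indeterminate, which is why the sketch raises an error in that case.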

By 1785, George Vancouver was able to report regarding his survey of the Kingston, Jamaica harbour that, “The positive situation of every point and near landmarks as well as the situation and extent of every Shoal has been fixed by intersecting Angles, taken by a sextant and protracted on the Spot, the Compasses only used to determine the Meridian, and observe its Variation.”

Swiss Precision

The United States began making contributions to the sciences of geodesy and hydrography in 1807 with the beginnings of the Survey of the Coast of the United States.

The Swiss immigrant, Ferdinand Hassler, was chosen to head this embryonic organization.

He combined the talents of mathematician, geodesist, metrologist, instrument-maker, linguist, and even legal expert.

Not only had he worked on the trigonometric survey of Switzerland, he had also served as its attorney general in 1798.

Hassler procured books and instruments for the Coast Survey between 1811 and 1815 and then started the field work in 1816.

In 1818, a law was passed forbidding civilians from working on the survey.

Hassler retired to a home in western New York for the next 14 years, until he was recalled to head the survey again.

In 1825, he published ‘Papers on Various Subjects connected with the Survey of the Coast of the United States’ in the Transactions of the American Philosophical Society.

This document served as the philosophic underpinning of the work and role of the Coast Survey.

(Photo: NOAA)

The twin themes of standards and accuracy permeate the ‘Papers’.

The word ‘standard’ is used as either noun or verb over 60 times.

The word ‘accurate’ and its derivative forms occur over 120 times throughout the document.

These simple words, combined with the inherent integrity of Ferdinand Hassler, were the foundation of the Survey of the Coast.

Hassler addressed the concept of accuracy in the ‘Papers’ with many practical examples of means to eliminate or reduce error and thus increase the accuracy of the final results of observation and measurement.

But he made a philosophical leap when he stated: “Absolute mathematical accuracy exists only in the mind of man.

All practical applications are mere approximations, more or less successful.

And when all has been done that science and art can unite in practice, the supposition of some defects in the instruments will always be prudent.

It becomes therefore the duty of an observer to combine and invent, upon theoretical principles, methods of systematical observations, by which the influence of any error of his instruments may be neutralized, either by direct means, or more generally by compensation.

Without such a method, and a regular system in his observations, his mean results will be under the influence of hazard, and may even be rendered useless by adding an observation, which would repeat an error already included in another observation.”
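Hassler's idea of compensation can be illustrated with a toy model. The sketch below assumes an instrument with a constant error that changes sign when the instrument is reversed, so that paired readings cancel it; the numbers and the names `TRUE_ANGLE`, `INDEX_ERROR` and `observe` are invented for the illustration and are not taken from the ‘Papers’. It also shows his warning in miniature: one extra, unpaired reading repeats an error already present and shifts the mean.

```python
from statistics import fmean

TRUE_ANGLE = 45.0    # degrees; hypothetical true value
INDEX_ERROR = 0.02   # degrees; hypothetical constant instrument error


def observe(reversed_position):
    """Return one reading; the instrument error flips sign when reversed."""
    sign = -1.0 if reversed_position else 1.0
    return TRUE_ANGLE + sign * INDEX_ERROR


# A systematic scheme: every direct reading is paired with a reversed one.
balanced = [observe(False), observe(True), observe(False), observe(True)]
# The same set with one extra direct reading that has no reversed partner.
unbalanced = balanced + [observe(False)]

balanced_error = fmean(balanced) - TRUE_ANGLE
unbalanced_error = fmean(unbalanced) - TRUE_ANGLE
print(f"balanced: {balanced_error:+.4f}  unbalanced: {unbalanced_error:+.4f}")
```

In this model the balanced set recovers the true angle, while the unbalanced set is off by one fifth of the instrument error.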

The Law of Human Error

Two surprisingly modern concepts that Hassler addressed were personal bias in the observation of physical phenomena and the effect of personal comfort, in other words ergonomics, upon the observer’s results.

He was among the first to take steps to mitigate various systematic errors and analyse their causes.

Further advances in understanding were made by Benjamin Peirce, head of Coast Survey longitude operations in the 1850s, and Charles Schott, the chief mathematician and geodesist of the Coast Survey.

Their work, like Hassler’s, was related to terrestrial positioning problems, but it ultimately led to more accurate nautical charts.

Plotting a three-point sextant fix.

Peirce began with the supposition that with only one observation of a physical quantity, that observation must be adopted as the true value of the constant.

However, “A second observation gives a second determination, which is always found to differ from the first.

The difference of the observations is an indication of the accuracy of each, while the mean of the two determinations is a new determination which may be regarded as more accurate than either.”

As more and more observations are acquired: “The comparison of the mean with the individual determinations has shown, in all cases in which such comparison has been instituted, that the errors of … observation are subject to law, and are not distributed in an arbitrary and capricious manner.

They are the symmetrical and concentrated groupings of a skillful marksman aiming at a target, and not the random scatterings of a blind man, nor even the designed irregularities of the stars in the firmament.

This law of human error is the more remarkable, and worthy of philosophic examination, that it is precisely that which is required to render the arithmetical mean of observations the most probable approach to the exact result.

It has been made the foundation of the method of least squares, and its introduction into astronomy by the illustrious Gauss is the last great era of this science.”
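The link Peirce draws between the arithmetical mean and least squares can be checked numerically: of all candidate values, the mean is the one that makes the sum of squared differences from the observations smallest. The short sketch below uses made-up observations and a hypothetical helper, `sum_of_squares`, to compare the mean with nearby candidates.

```python
from statistics import fmean


def sum_of_squares(observations, estimate):
    """Sum of squared differences between the observations and an estimate."""
    return sum((x - estimate) ** 2 for x in observations)


observations = [12.4, 12.7, 12.5, 12.9, 12.6]  # hypothetical repeated measurements
mean = fmean(observations)

# The sum of squares is smallest at the mean and grows on either side of it.
for candidate in (mean - 0.1, mean, mean + 0.1):
    print(f"{candidate:.3f} -> {sum_of_squares(observations, candidate):.4f}")
```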

Peirce continued: “If the law of error embodied in the method of least squares were the sole law to which human error is subject, it would happen that by a sufficient accumulation of observations any imagined degree of accuracy would be attainable in the determination of a constant; and the evanescent influence of minute increments of error would have the effect of exalting man’s power of exact observation to an unlimited extent.

I believe that the careful examination of observations reveals another law of error, which is involved in the popular statement that ‘man cannot measure what he cannot see’.

The small errors which are beyond the limits of human perception, are not distributed according to the mode recognized by the method of least squares, but either with the uniformity which is the ordinary characteristic of matters of chance, or more frequently in some arbitrary form dependent upon individual peculiarities – such, for instance, as an habitual inclination to the use of certain numbers.

On this account it is in vain to attempt the comparison of the distribution of errors with the law of least squares to too great a degree of minuteness; and on this account there is in every species of observation an ultimate limit of accuracy beyond which no mass of accumulated observations can ever penetrate.”
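Peirce's limit can be imitated with a small simulation. It is a toy model under stated assumptions, not a reconstruction of his analysis: readings carry random Gaussian error but are recorded only to a fixed resolution, which stands in for the limit of perception. The function `mean_error`, the true value, the noise level and the resolutions are all hypothetical. When the resolution is much coarser than the random error, averaging more readings stops helping.

```python
import random
from statistics import fmean


def mean_error(n, resolution, true_value=10.23, sigma=0.005, seed=1):
    """Error of the mean of n noisy readings recorded to a given resolution."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    readings = []
    for _ in range(n):
        raw = true_value + rng.gauss(0.0, sigma)
        # The observer can only record multiples of the resolution.
        readings.append(round(raw / resolution) * resolution)
    return fmean(readings) - true_value


for n in (10, 1000, 100000):
    coarse = mean_error(n, resolution=0.1)
    fine = mean_error(n, resolution=0.0001)
    print(f"n={n:>6}  coarse error={coarse:+.5f}  fine error={fine:+.5f}")
```

With the coarse resolution the error of the mean stays near the same value however many readings are taken, whereas with the fine resolution it keeps shrinking. In this model only a better method, here a finer resolution, moves the limit.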

Improving the Methods

“A wise observer, when he perceives that he is approaching this limit, will apply his powers to improving the methods, rather than to increasing the number of observations.

This principle will thus serve to stimulate, and not to paralyse effort; and its vivifying influence will prevent science from stagnating into mere mechanical drudgery....”

Peirce’s words are at the heart of modern hydrographic surveying.

With the coming of electronic systems, the need to analyse and understand sources of error and the limits of accuracy of those various systems has been of paramount importance.

From the early twentieth century onward, generations of hydrographers have studied the limitations of and made improvements to their navigation systems and sounding systems.

They have not ‘stagnated’, but instead have concentrated their efforts on ‘improving the methods’.

One wonders what our future ‘ultimate limit of accuracy’ will be.

(Courtesy: NOAA)

- GeoGarage blog: The hydrography of the former Zuiderzee

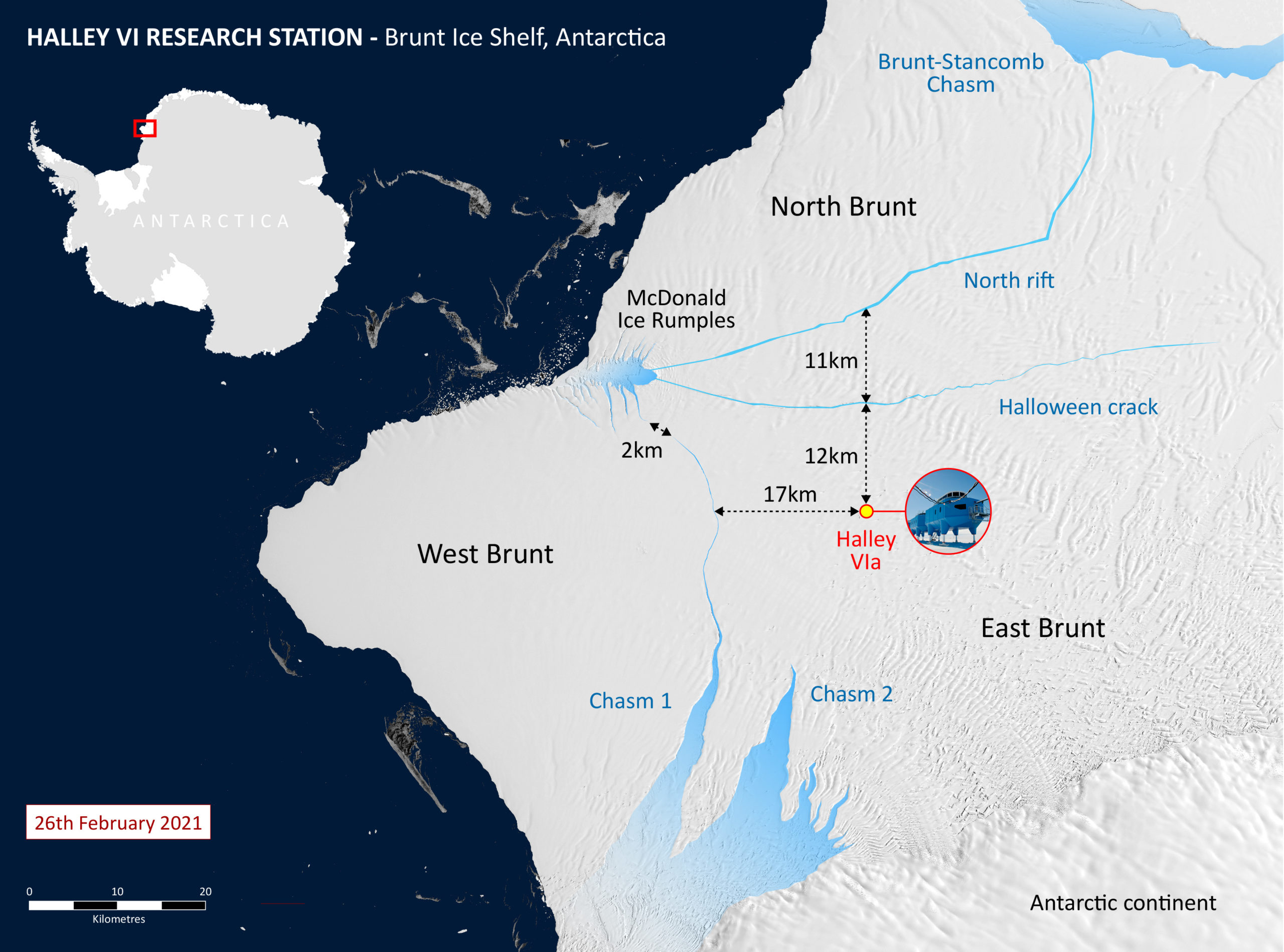
